As AI becomes central to defense, aerospace, and other high-compliance sectors, the question isn't just which model to use, but how to deploy it. For mission-driven organizations, the architecture behind AI matters as much as its capabilities.

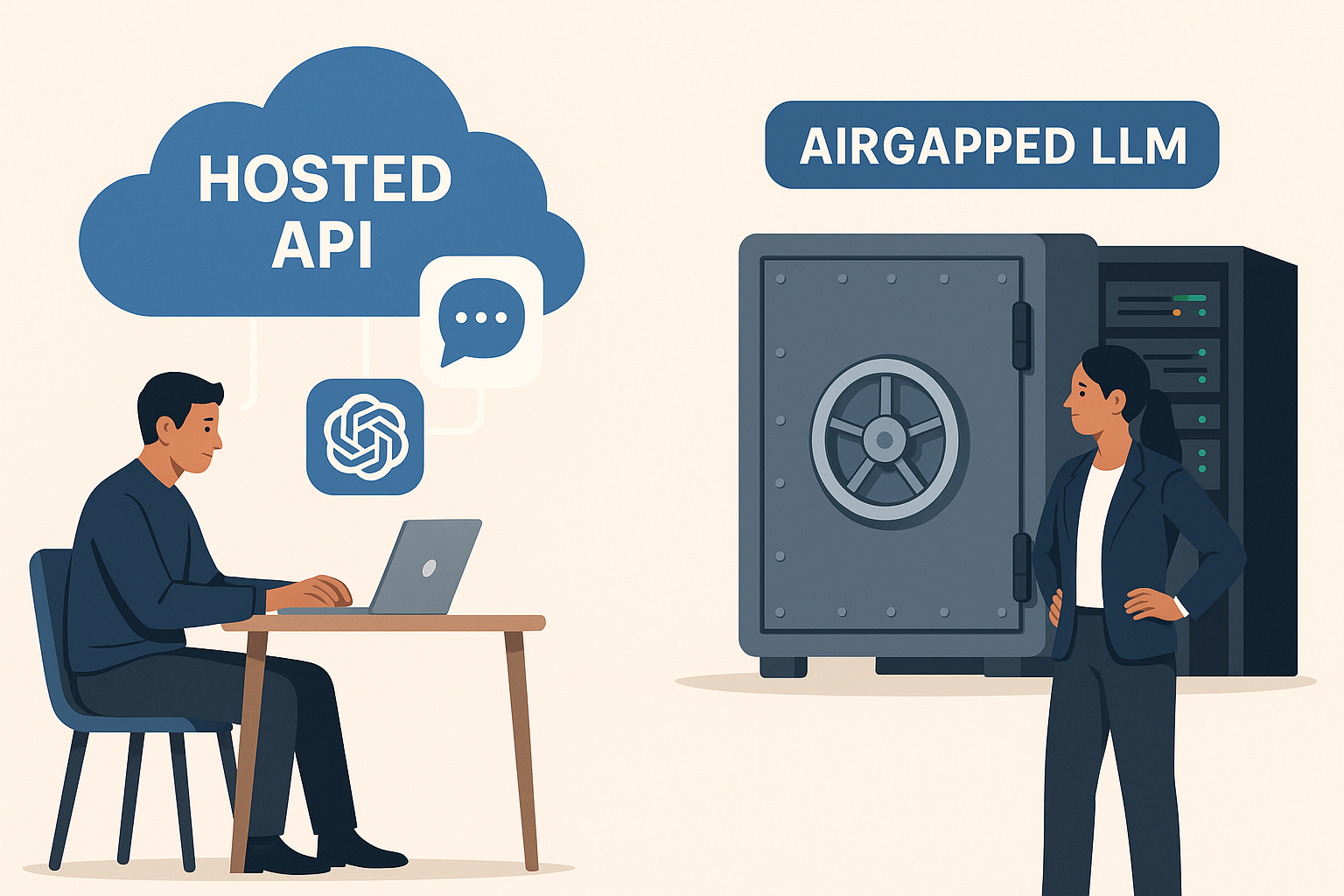

Should you tap into hosted APIs from OpenAI or Anthropic? Or should you bring your own models in-house, within secure, airgapped environments?

Let’s break it down.

The Case for Hosted AI APIs:

Hosted APIs, such as OpenAI's GPT models or Anthropic's Claude, offer plug-and-play access to powerful models, often with:

Low-friction deployment

Regular updates and improvements

Minimal infrastructure overhead

For teams building prototypes or running low-sensitivity tasks (like summarizing public documents or creating marketing drafts), hosted APIs are often a great fit.

But here’s the tradeoff:

You're trusting a third-party provider with your prompts, data, and outputs. For industries with classified, proprietary, or otherwise sensitive workflows, that is frequently a dealbreaker.
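One common way to contain this tradeoff is to gate each request on data sensitivity before it can leave your network. The sketch below assumes a simple three-level labeling scheme; the `Sensitivity` levels, the marker words, and both endpoint URLs are hypothetical placeholders, not any provider's API. It fails closed: anything not explicitly public is routed to a self-hosted endpoint.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    # Hypothetical labeling scheme; real programs use their own classification levels.
    PUBLIC = 0
    INTERNAL = 1
    REGULATED = 2


# Hypothetical endpoints: a third-party hosted API and an in-house model server.
HOSTED_ENDPOINT = "https://api.hosted-provider.example/v1/chat"
LOCAL_ENDPOINT = "http://llm.internal.example:8000/v1/chat"

# Hypothetical marker words that force a request to stay local, whatever its label says.
RESTRICTED_MARKERS = re.compile(r"\b(CONFIDENTIAL|PROPRIETARY|CUI|ITAR)\b", re.IGNORECASE)


@dataclass(frozen=True)
class Request:
    prompt: str
    sensitivity: Sensitivity


def effective_sensitivity(req: Request) -> Sensitivity:
    """Escalate to REGULATED if the prompt text carries a restricted marker word."""
    if RESTRICTED_MARKERS.search(req.prompt):
        return Sensitivity.REGULATED
    return req.sensitivity


def choose_endpoint(req: Request) -> str:
    """Fail closed: only requests still PUBLIC after escalation may leave the network."""
    if effective_sensitivity(req) is Sensitivity.PUBLIC:
        return HOSTED_ENDPOINT
    return LOCAL_ENDPOINT
```

For example, `choose_endpoint(Request("Summarize this press release", Sensitivity.PUBLIC))` returns `HOSTED_ENDPOINT`, while the same request prefixed with "CONFIDENTIAL:" goes to `LOCAL_ENDPOINT`. Keyword matching is only a backstop here; the label itself should come from your organization's data-handling process.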

Why Self-Hosted, Airgapped LLMs Are Rising:

More organizations are shifting toward self-contained LLM stacks for:

Security: No external transmission of data

Control: Tailored fine-tuning and access policies

Speed: Potentially lower latency, since requests never leave the local network

Compliance: Alignment with internal data handling protocols

Open-weight models such as Llama 3, Mistral, Falcon, and Phi are enabling this shift, supported by tools such as NVIDIA NeMo and Hugging Face Transformers for deployment.
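For an airgapped deployment with Hugging Face Transformers, one practical step is making sure nothing tries to reach the Hugging Face Hub. A minimal sketch, assuming the model files have already been copied onto the isolated machine: it sets the `HF_HUB_OFFLINE` and `TRANSFORMERS_OFFLINE` environment variables and returns the `local_files_only=True` keyword for `from_pretrained`. The helper name `prepare_offline_load` and the model path in the comments are hypothetical.

```python
import os
from pathlib import Path


def prepare_offline_load(model_dir: str) -> tuple[str, dict]:
    """Hypothetical helper: enable offline mode and validate a local model directory.

    Call this before importing transformers / huggingface_hub, since those
    libraries may read the offline variables at import time.
    """
    # Environment switches that stop Hugging Face libraries from contacting the Hub.
    os.environ["HF_HUB_OFFLINE"] = "1"
    os.environ["TRANSFORMERS_OFFLINE"] = "1"

    path = Path(model_dir)
    # A model saved with save_pretrained() normally includes config.json.
    if not (path / "config.json").is_file():
        raise FileNotFoundError(f"no config.json in {path}; copy the model files in first")

    # local_files_only=True makes from_pretrained use local files and never download.
    return str(path), {"local_files_only": True}


# Usage (requires transformers; the path is a hypothetical example):
# model_path, kwargs = prepare_offline_load("/opt/models/llama-3-8b-instruct")
# from transformers import AutoModelForCausalLM, AutoTokenizer
# tokenizer = AutoTokenizer.from_pretrained(model_path, **kwargs)
# model = AutoModelForCausalLM.from_pretrained(model_path, **kwargs)
```

Setting the variables and passing `local_files_only=True` is deliberately redundant: if either safeguard is missed, loading fails loudly instead of silently attempting a network call.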

Choosing What’s Right for You:

Ask yourself:

Do your AI tasks involve regulated or internal data?

Are uptime and latency critical factors?

Do you need traceability and auditability?

If the answer to any of these is yes, airgapped AI may be worth the upfront infrastructure investment.
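Access control and auditability are where a self-hosted stack gives you the most room, because you own every request path. As one illustration, here is a sketch of an access check paired with a hash-chained audit log: each record stores the hash of the previous one, so editing an earlier entry breaks verification. The role names, model names, and record fields are hypothetical, and the sketch stores a SHA-256 digest of each prompt rather than the raw text; a real deployment would also need durable, append-only storage.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field

# Hypothetical role-to-model access policy.
ACCESS_POLICY = {
    "analyst": {"general-7b"},
    "engineer": {"general-7b", "code-13b"},
}

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(record: dict) -> str:
        """Hash every field except the record's own hash, in a stable key order."""
        body = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def authorize_and_log(self, user: str, role: str, model: str, prompt: str) -> bool:
        """Check the policy, append a chained record (allowed or denied), return the decision."""
        allowed = model in ACCESS_POLICY.get(role, set())
        record = {
            "ts": time.time(),
            "user": user,
            "role": role,
            "model": model,
            "prompt_sha256": hashlib.sha256(prompt.encode()).hexdigest(),
            "allowed": allowed,
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return allowed

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered record makes this False."""
        prev = GENESIS_HASH
        for record in self.entries:
            if record["prev"] != prev or record["hash"] != self._digest(record):
                return False
            prev = record["hash"]
        return True
```

Denied requests are logged too, since failed access attempts are often exactly what an auditor wants to see.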

Conclusion:

Hosted APIs are a great starting point, but for long-term, secure AI operations, the trend is toward sovereign, private, in-house intelligence.

Start with what’s easy. But plan for what’s secure.